We are in a new and interesting legal world. Although, to date, no US court case has used brain-based lie detection as evidence, several cases have sought such evidence and then settled out of court. fMRI is the most common brain-based lie detection technology, and two companies, Cephos and No Lie MRI, provide it as a service in the legal domain. There have also been attempts to use EEG for deception detection; notably, such a technique was used in part to prosecute a young woman for murder in India in 2008.

I am far from the first to point out that this technology is highly exploratory and not accurate enough to be used in a court of law. My goal here is to outline a good number of the reasons why.

9. We do not know how accurate these techniques are. Although the two aforementioned companies claim lie detection accuracy rates above 90%, these figures cannot be verified by an independent lab because the companies' methods are trade secrets. Similarly, there are few peer-reviewed studies of the putative EEG-based marker of deception, the P300, and most come from a lab that is commercially involved with a company trying to sell the technique as a product. Interestingly, an independent lab studying the effect of countermeasures on the technique found an 82% hit rate in controls (not the 99% accuracy claimed by the company), and this dropped to 18% when countermeasures were used!

8. In the academic literature, where we do have access to methodology, we are largely limited to testing typical research participants: undergraduate psychology majors (although there are exceptions). For a lie detection method to be valid, it would need to be shown to be accurate across a wide variety of populations, varying in age, education, drug use, etc. Undergraduates are unlikely to be as skilled at deception as a career criminal might be, and it has been shown that the more often one lies, the easier lying becomes. Most fMRI-based lie detection techniques rest on the assumption that lying is hard to do and therefore requires the brain to use more energy. If frequent lying makes lying easy, then practiced liars may not show this pattern of brain activity at all.

Although a fair amount has been made lately about WEIRD (Western, Educated, Industrialized, Rich, and Democratic) subjects, participants in these studies are actually beyond WEIRD: they are almost exclusively right-handed and predominantly male.

7. Along the same lines, the "lies" told in these studies rarely have any impact on the lives of the student participants. Occasionally, an extra reward is given if a participant manages to "trick" the system, but in the real world, with reputations and civil liberties at stake, one might imagine that a person would try much harder to trick the scanner. More generally, being instructed to lie about a low-stakes laboratory situation is not the same as lying in the high-stress situations where this technology would be used in real life.

Occasionally, a study will try to address this by using a mock crime (such as a theft) as the deceptive scenario. However, these designs are also of limited use, because participants know the situation is contrived.

6. As with traditional polygraph tests, it is possible to fool brain-based lie detection systems with countermeasures. Indeed, in an article in press at NeuroImage, Ganis and colleagues found that deliberate countermeasures on the part of their participants dropped deception detection from 100% to 30%. Most studies of fMRI lie detection have found more brain activation for lies than for truths, suggesting that lying is more difficult for participants. But does this still hold for well-rehearsed lies? And what about subjects who perform mental arithmetic during truthful answers to fool the scanner?

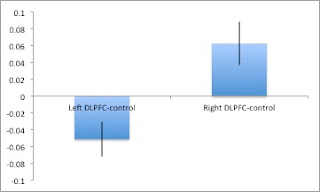

5. There is a general lack of consistency in the academic literature. To date, there are roughly 25 published, peer-reviewed studies of deception using fMRI, and across them at least as many brain areas have been implicated in deception, including the anterior prefrontal area, ventromedial prefrontal area, dorsolateral prefrontal area, parahippocampal areas, anterior cingulate, left posterior cingulate, temporal and subcortical caudate, right precuneus, left cerebellum, insula, putamen, caudate, thalamus, and various regions of temporal cortex! Of course, we know better than to believe that there is a dedicated "lying region" of the brain, and given the diversity of deception tasks (everything from "lie about this playing card" to "lie about things you typically do during the day"), the diversity of regions is not surprising. However, the lack of replication is a cause for concern, particularly when science is being applied to issues of civil liberties.

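4. An additional issue is that many of these studies are not properly balanced: participants are instructed to lie more often, or less often, than they are instructed to tell the truth. This matters because rare events draw extra attention on their own, so a difference between lie and truth trials may partly reflect how infrequent one trial type is rather than deception itself.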
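One concrete consequence of an unbalanced design is that raw accuracy figures become misleading. The sketch below uses hypothetical trial counts (60 lie trials and 20 truth trials, not taken from any particular study) and illustrative function names. It shows that a "detector" that ignores the brain data entirely and always answers "lie" already scores 75% raw accuracy, while balanced accuracy (the mean of the hit rate on lie trials and the correct-rejection rate on truth trials) exposes it as pure chance.

```python
# Hypothetical design: these trial counts are illustrative, not from any study.

def majority_baseline_accuracy(n_lie: int, n_truth: int) -> float:
    """Raw accuracy of a 'detector' that ignores the brain data and always
    predicts whichever response type was more common in the design."""
    return max(n_lie, n_truth) / (n_lie + n_truth)


def balanced_accuracy(hits: int, n_lie: int,
                      correct_rejections: int, n_truth: int) -> float:
    """Mean of the per-class rates: hits on lie trials and correct
    rejections on truth trials. Guessing the majority class scores 0.5."""
    return 0.5 * (hits / n_lie + correct_rejections / n_truth)


n_lie, n_truth = 60, 20
print(majority_baseline_accuracy(n_lie, n_truth))  # 0.75

# Always answering "lie": every lie trial is a hit,
# and no truth trial is correctly rejected.
print(balanced_accuracy(hits=60, n_lie=60, correct_rejections=0, n_truth=20))  # 0.5
```

With a balanced design (equal numbers of lie and truth trials), the two measures coincide for this trivial detector, which is one reason balanced designs make reported accuracies easier to interpret.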

3. There is a large difference between group averages and detecting deception within an individual. Knowing that, on average, brain region X is significantly more active in a group of subjects during deception than during truth does not tell you that, for subject 2 on trial 9, deception was likely to have occurred because of differences in activation. Of course, some studies are trying to work at this level of analysis, but right now they are in the minority.
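To make the gap between group-level and single-trial inference concrete, here is a minimal Python sketch under simplifying assumptions chosen for illustration, not drawn from any study: activation in a hypothetical region X on lie and truth trials is normally distributed with equal variance, the lie mean is higher by an effect size d (in standard-deviation units), and trials are independent. With d = 0.3 and 200 trials per condition, the group difference is highly significant, yet a threshold rule applied to a single trial is right only about 56% of the time.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def group_z(d: float, n_per_condition: int) -> float:
    """z statistic for the difference between two independent group means,
    each condition having unit SD: SE = sqrt(1/n + 1/n) = sqrt(2/n)."""
    return d / math.sqrt(2.0 / n_per_condition)


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic."""
    return 2.0 * (1.0 - normal_cdf(abs(z)))


def single_trial_accuracy(d: float) -> float:
    """Accuracy of classifying one trial as 'lie' when activation exceeds
    the midpoint d/2, with equal numbers of lie and truth trials."""
    return normal_cdf(d / 2.0)


d, n = 0.3, 200  # hypothetical effect size and trials per condition
z = group_z(d, n)
print(f"group z = {z:.2f}, p = {two_sided_p(z):.4f}")         # group z = 3.00, p = 0.0027
print(f"single-trial accuracy = {single_trial_accuracy(d):.2f}")  # single-trial accuracy = 0.56
```

The group statistic grows with the number of trials, but single-trial accuracy depends only on d, so collecting more data makes the group result look stronger without making any individual verdict more reliable.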

2. Not everything we believe that is false is a lie. Most of us believe we are above-average drivers, and smarter and more attractive than most, even when these beliefs are not true. Memories, even so-called "flashbulb" memories, are not foolproof.

1. Are all lies equivalent to the brain? Are lies about knowledge of a crime the same, in the brain, as white lies such as "no, honey, those pants don't make you look fat," or as lies of omission or self-deceiving lies?